WEBSCRAPER IOI SOFTWARE

WebHarvy is a desktop application that can scrape websites locally (it runs on your computer, not on a cloud server). Its visual scraping feature allows you to define extraction rules just like Octoparse and Parsehub. The difference is that you only pay for the software once; there isn't any monthly billing. WebHarvy is a good choice for fast and simple scraping tasks. However, a large-scale scraping task can take a long time, because you are limited by the number of CPU cores on your local computer. It's also complicated to implement complex logic compared to software like Parsehub or Octoparse.

FMiner is another piece of software very similar to WebHarvy. Its features are limited compared to the competition, and its UI isn't as good as Parsehub's or Octoparse's.
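WebHarvy's limit on large jobs comes from running on a single machine. We can't inspect WebHarvy's internals, so the following is only a minimal sketch, assuming a Node.js environment, of a local worker pool capped at the machine's core count (`os.cpus().length`). The `runWithLimit` and `fakeScrape` helpers are hypothetical; `fakeScrape` just simulates a page with a timer.

```javascript
const os = require('os');

// Run async tasks with at most `limit` of them in flight at once.
async function runWithLimit(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;
  // Each worker repeatedly claims the next unstarted task until none remain.
  async function worker() {
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }
  const workers = [];
  for (let i = 0; i < Math.min(limit, tasks.length); i++) {
    workers.push(worker());
  }
  await Promise.all(workers);
  return results;
}

// Hypothetical "scrape": a short timer standing in for network and parsing work.
function fakeScrape(url) {
  return () => new Promise((resolve) => setTimeout(() => resolve(`scraped ${url}`), 10));
}

const urls = Array.from({ length: 20 }, (_, i) => `https://example.com/page/${i}`);
const limit = os.cpus().length; // one worker per local core
runWithLimit(urls.map(fakeScrape), limit).then((pages) => {
  console.log(`${pages.length} pages scraped with ${limit} workers`);
});
```

No matter how many URLs are queued, at most `limit` pages are processed at once, so total run time grows with the length of the queue.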

Portia is another great open source project from ScrapingHub. It's a visual abstraction layer on top of the great Scrapy framework: it lets you create Scrapy spiders with a visual tool, without writing a single line of code. Portia is a web application written in Python, and you can run it easily thanks to the Docker image. Simply run the following:

`docker run -v ~/portia_projects:/app/data/projects:rw -p 9001:9001 scrapinghub/portia`

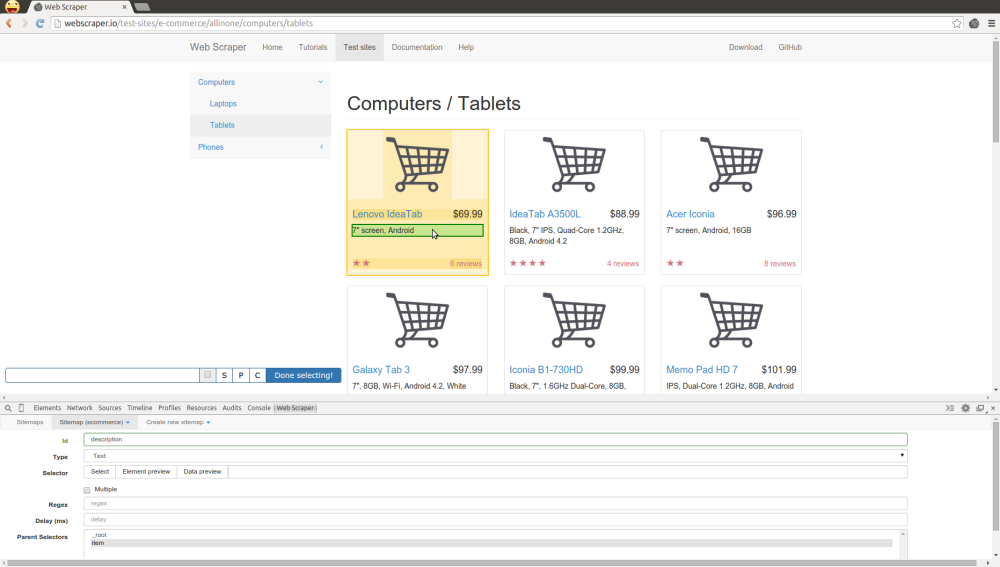

DataMiner is one of the most famous Chrome extensions for web scraping (186k installations and counting). What is unique about DataMiner is that it has a lot of features compared to other extensions. Generally, Chrome extensions are easier to use than desktop apps like Octoparse or Parsehub, but they lack many features. DataMiner fits right in the middle.

To use Web Scraper as a library, run `npm i web-scraper-headless`.

Features:
- Extract data from dynamic pages (JavaScript+AJAX)
- Sitemaps and scraped data are stored in the browser's local storage or in CouchDB

Help:
- Documentation and tutorials are available on webscraper.io
- Ask for help, submit bugs, and suggest features on google-groups
- Submit bugs and suggest features on github-issues. When submitting a bug, please attach an exported sitemap if possible.

Headless mode:

To use Web Scraper as a library you need a sitemap. You can write it by hand, but the easiest way is to scrape with the original extension and then click on "export sitemap". Load the library with `const webscraper = require('web-scraper-headless')` and pass it the sitemap together with an optional `options` object that sets `delay`, `pageLoadDelay` and `browser`. For example, a sitemap can visit GitHub and retrieve the last commit of every trending repository; such a sitemap depends on GitHub's actual DOM, so it might get outdated.

By default, web-scraper-headless opens jsdom as its browser. jsdom is a pure JavaScript implementation of HTML, so it has no native dependencies and is very lightweight. However, it cannot execute JavaScript, which might be a hindrance in some cases. If that is your case, you can use Chrome headless as the browser instead: reuse the same sitemap and select Chrome headless through the `browser` option. Note that Chrome headless consumes far more resources than jsdom, and you need to have some native dependencies installed on the server.
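The `delay` and `pageLoadDelay` options are only named here, not explained, so the following is a sketch of how such options could be applied, assuming both are millisecond values: `delay` as a pause between consecutive requests and `pageLoadDelay` as a wait after each page loads, before extraction. `politeCrawl` and the stub loader are hypothetical names and are not part of web-scraper-headless.

```javascript
// Sketch only: the semantics of `delay` and `pageLoadDelay` are assumed here.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function politeCrawl(urls, loadPage, options = {}) {
  const { delay = 0, pageLoadDelay = 0 } = options; // both in milliseconds (assumed)
  const results = [];
  for (let i = 0; i < urls.length; i++) {
    if (i > 0) await sleep(delay);        // pause between consecutive requests
    const page = await loadPage(urls[i]); // hypothetical page loader
    await sleep(pageLoadDelay);           // let dynamic content settle before extracting
    results.push(page);
  }
  return results;
}

// Usage with a stub loader standing in for a real browser (jsdom or Chrome headless).
const stubLoader = async (url) => ({ url, title: `Title of ${url}` });
politeCrawl(['https://example.com/a', 'https://example.com/b'], stubLoader, { delay: 50, pageLoadDelay: 20 })
  .then((pages) => console.log(pages.map((p) => p.title)));
```

Crawling sequentially with explicit pauses trades speed for a lighter load on the target site; with N pages the run takes at least (N - 1) × `delay` + N × `pageLoadDelay` milliseconds.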

WEBSCRAPER IOI INSTALL

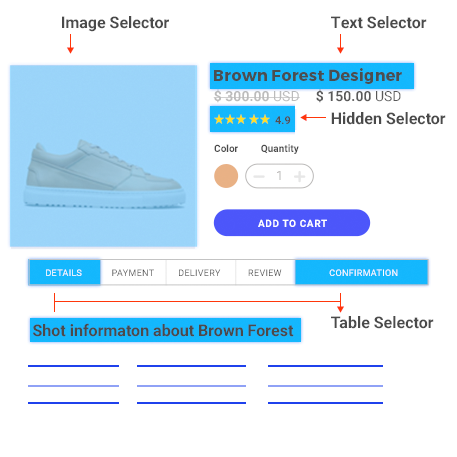

Web Scraper is a Chrome browser extension and a library built for data extraction from web pages. Using this extension you can create a plan (sitemap) of how a website should be traversed and what should be extracted. Web Scraper will then navigate the site accordingly and extract all data. To use it as an extension, install it from the Chrome Web Store.
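To make the idea of a sitemap as a traversal plan concrete, here is a small JavaScript sketch. The sitemap object is hypothetical: its field names (`startUrl`, `selectors`, `parentSelectors`, `_root`, and the selector types) are modeled on exported Web Scraper sitemaps but should be treated as assumptions. `traversalPlan` is a made-up helper that only walks the selector tree breadth-first to show the order in which selectors would apply; it does not fetch or parse any page.

```javascript
// Hypothetical sitemap; field names are assumptions modeled on exported sitemaps.
const sitemap = {
  _id: 'example-blog',
  startUrl: ['https://example.com/blog'],
  selectors: [
    { id: 'post-link', type: 'SelectorLink', selector: 'h2 a', parentSelectors: ['_root'], multiple: true },
    { id: 'title', type: 'SelectorText', selector: 'h1', parentSelectors: ['post-link'], multiple: false },
    { id: 'date', type: 'SelectorText', selector: 'time', parentSelectors: ['post-link'], multiple: false },
  ],
};

// Breadth-first walk from the root: selectors on the start page come first,
// then selectors applied on pages reached through link selectors.
// Each selector is listed once, under the first parent that reaches it.
function traversalPlan(map) {
  const order = [];
  const queue = ['_root'];
  const seen = new Set(queue);
  while (queue.length > 0) {
    const parent = queue.shift();
    for (const sel of map.selectors) {
      if (sel.parentSelectors.includes(parent) && !seen.has(sel.id)) {
        seen.add(sel.id);
        order.push(`${parent} -> ${sel.id} (${sel.type} "${sel.selector}")`);
        queue.push(sel.id);
      }
    }
  }
  return order;
}

console.log(traversalPlan(sitemap).join('\n'));
```

The plan starts with the link selector applied to the start page, followed by the two text selectors applied on each linked post.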
